In the fast-paced world of digital marketing, staying ahead of the competition is crucial. One way to gain a competitive edge is through A/B testing. But what exactly is A/B testing? And how can it be used effectively to optimize your marketing efforts?

A/B testing, also known as split testing, is a method of comparing two versions of a webpage or marketing campaign to determine which one performs better. By dividing your audience into two groups and exposing them to different variations, you can gather data and insights on what resonates with your target audience.

The role of A/B testing in digital marketing is significant. It allows marketers to make data-driven decisions, improve user engagement, reduce bounce rates, and ultimately increase conversion rates. By testing different elements such as headlines, call-to-action buttons, layouts, or even pricing strategies, you can fine-tune your marketing efforts to maximize results.

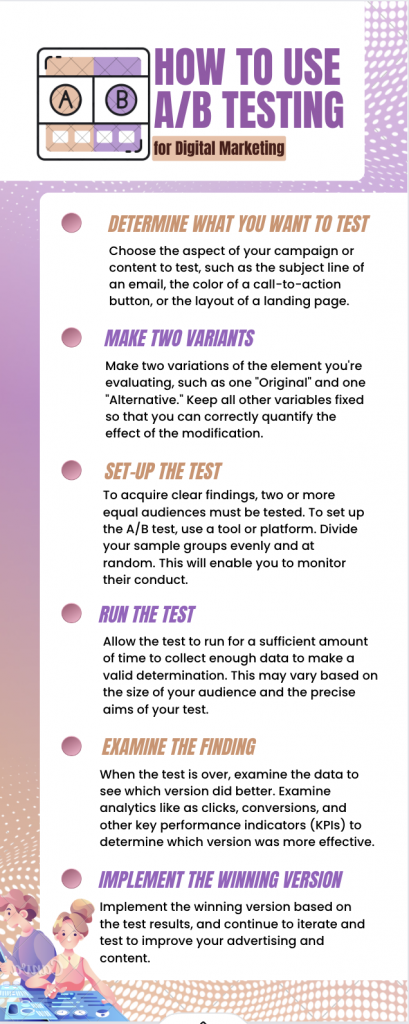

Implementing A/B testing involves several key steps. First, you need to identify the element you want to test. This could be anything from the color scheme of a website to the subject line of an email campaign. Once you have identified the element, you can develop a hypothesis based on your goals and insights. This hypothesis will guide the creation of the A/B variants that you will test.

Executing the test involves randomly assigning your audience to two groups and exposing each group to a different variant. Both variants should run at the same time so that external factors, such as holidays or other events, affect them equally and do not skew the results. Once the test is complete, it is time to analyze the results. This involves comparing the performance of each variant and determining which one achieved the desired outcome.

To make the most of A/B testing, keep a few best practices in mind. Testing one change at a time allows you to isolate variables and accurately determine the impact of each element. A sufficiently large sample size makes the results more reliable. It is also crucial to allow enough time for the test to run, as rushing it may lead to inaccurate conclusions. Lastly, statistical analysis techniques help you interpret the data and make informed decisions.

While A/B testing can be a powerful tool, it is essential to avoid common mistakes. Not testing simultaneously can skew results, because external factors may influence one variant more than the other. Small changes may seem insignificant, but even minor tweaks can have a noticeable impact on user behavior, so ignoring them can mean missed optimization opportunities. Stopping tests too early can produce unreliable conclusions, and not accounting for external factors can produce misleading ones.

In short, A/B testing is a valuable technique that allows marketers to make data-driven decisions and optimize their digital marketing efforts. By understanding the fundamentals, implementing the test effectively, following best practices, and avoiding common mistakes, you can harness the power of A/B testing to drive better results and stay ahead in the competitive digital landscape.

Understanding the Fundamentals: What is A/B Testing?

A/B testing, also known as split testing, is a method used to compare two different versions of a webpage or marketing campaign to determine which one performs better in terms of user engagement and conversion rates. It involves dividing your audience into two groups and exposing each group to a different variant, with the goal of identifying the variant that generates the most favorable response.

The concept behind A/B testing is relatively straightforward. Instead of making assumptions or relying on intuition, A/B testing allows you to gather concrete data and insights about your target audience’s preferences and behaviors. By systematically testing different variations of a webpage or marketing element, you can make informed decisions based on real-world data rather than guesswork.

To illustrate this concept, let’s consider an example. Suppose you have an e-commerce website and want to improve the conversion rate of your product landing page. With A/B testing, you can create two different versions of the landing page – variant A and variant B. Half of your website visitors will be randomly assigned to view variant A, while the other half will see variant B. By tracking and analyzing user interactions, you can determine which variant leads to higher conversion rates, such as more purchases or sign-ups.
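
To make that comparison concrete, here is a minimal Python sketch that computes each variant's conversion rate and the relative lift of B over A. The function names and the visitor and conversion counts are hypothetical, chosen only for illustration.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Fraction of visitors who completed the desired action (purchase, sign-up, ...)."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def relative_lift(rate_a: float, rate_b: float) -> float:
    """Relative change of variant B over variant A (0.25 means B is 25% higher)."""
    return (rate_b - rate_a) / rate_a


# Hypothetical results: 4,000 visitors saw each landing page variant.
rate_a = conversion_rate(120, 4000)  # 0.03, i.e. 3%
rate_b = conversion_rate(150, 4000)  # 0.0375, i.e. 3.75%
print(f"A: {rate_a:.2%}  B: {rate_b:.2%}  lift: {relative_lift(rate_a, rate_b):.1%}")
```

A higher observed rate on its own does not prove that B is better. Whether a difference this size could be due to chance is a separate question, which the statistical analysis step answers.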

A/B testing can be applied to various elements of your marketing strategy, including website design, ad copy, email campaigns, pricing strategies, and more. It allows you to evaluate different aspects of your marketing efforts and optimize them based on measured results rather than assumptions.

One of the key advantages of A/B testing is that it reduces guesswork and subjective opinion in the decision-making process. Instead, it relies on objective data to guide your marketing strategies. By repeatedly testing and refining your marketing elements, you can keep improving your results over time.

It’s important to note that A/B testing requires a structured approach to ensure accurate results. This involves defining clear goals, formulating hypotheses, carefully selecting the elements to test, executing the test properly, and analyzing the data objectively. By following these fundamental principles, you can gain valuable insights into your audience’s preferences and behaviors, enabling you to make data-driven decisions that lead to improved marketing performance.

In the following sections, we will delve deeper into the role of A/B testing in digital marketing and explore how to effectively implement and utilize this technique to optimize your marketing efforts.

The Role of A/B Testing in Digital Marketing

A/B testing plays a crucial role in digital marketing by enabling marketers to optimize their strategies and improve overall campaign performance. It offers several benefits that can have a significant impact on user engagement, bounce rates, and conversion rates. In this section, we will explore the role of A/B testing in digital marketing and how it can benefit your marketing efforts.

Improved User Engagement

One of the primary goals of digital marketing is to engage and capture the attention of your target audience. A/B testing allows you to experiment with different elements of your marketing campaigns, such as headlines, images, or calls-to-action, to determine which combination resonates best with your audience. By identifying the most effective elements, you can create a compelling user experience that captures attention, encourages interaction, and keeps visitors engaged with your brand.

Reduced Bounce Rates

Bounce rate is the percentage of visitors who leave a website after viewing only the page they landed on, without taking any further action. High bounce rates can indicate that your website or landing page is not effectively engaging visitors or meeting their expectations. A/B testing can help you identify the factors that contribute to high bounce rates and make necessary improvements. By testing different layouts, content placement, or navigation options, you can create a more user-friendly and engaging experience that reduces bounce rates and encourages visitors to explore further.

Increased Conversion Rates

Conversion rates are a critical metric in digital marketing, as they reflect the percentage of visitors who complete a desired action, such as making a purchase, submitting a form, or signing up for a newsletter. A/B testing can significantly impact conversion rates by optimizing various elements of your marketing campaigns. By testing different variations of your call-to-action buttons, form designs, or pricing strategies, you can identify the most effective combinations that drive higher conversion rates. This can lead to increased sales, improved lead generation, and overall campaign success.

In summary, A/B testing plays a vital role in digital marketing by improving user engagement, reducing bounce rates, and increasing conversion rates. By systematically testing different variations of your marketing elements, you can gain valuable insights into what resonates best with your audience and make data-driven decisions that optimize your marketing efforts. In the next section, we will explore the step-by-step process of implementing A/B testing to achieve these benefits.

How to Implement A/B Testing

Implementing A/B testing involves a systematic process that allows you to test and compare different variations of your marketing elements. By following the steps outlined below, you can effectively implement A/B testing and gather valuable insights to optimize your marketing efforts.

Identifying the Element to Test

The first step in implementing A/B testing is to identify the specific element you want to test, such as the layout of a webpage, the headline of an email, or the design of a call-to-action button. By focusing on a single element, you can isolate variables and accurately assess its impact on user behavior and conversion rates.

Developing a Hypothesis

Once you have identified the element to test, develop a hypothesis: a statement that predicts the expected outcome of the test. A useful hypothesis names the change, the metric you will measure, and the direction you expect that metric to move, which gives the experiment a clear objective. For example, if you are testing different headlines for a blog post, your hypothesis could be that a headline stating the post’s main benefit will produce a higher click-through rate than the current headline.

Creating the A/B Variants

With your hypothesis in mind, it’s time to create the A/B variants, which are different versions of the element you are testing. For example, if you are testing a call-to-action button, you might create two versions that differ in color, text, or placement. Make the difference in the tested element clear enough that it could plausibly change user behavior, and keep everything else identical so that any difference in results can be attributed to that element.

Executing the Test

Once you have created the A/B variants, it’s time to execute the test. This involves randomly assigning your audience to two groups, Group A and Group B. Group A sees variant A, while Group B sees variant B. Both variants must run at the same time so that external factors affect both groups equally and do not skew the results. A/B testing software or tools can handle the random assignment and serve both variants concurrently.
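
Testing tools usually handle assignment for you. As an illustration of how random yet consistent assignment can work, here is a minimal Python sketch that hashes a user ID together with an experiment name. It assumes every visitor has a stable ID, such as a cookie value. The function name and the experiment name are hypothetical, and this is one common approach, not the method of any particular tool.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, split: float = 0.5) -> str:
    """Deterministically assign a user to 'A' or 'B'.

    Hashing the experiment name together with the user ID gives each user a
    stable, effectively random bucket, so a returning visitor always sees the
    same variant while both variants are served during the same period.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits (32 bits) to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "A" if bucket < split else "B"


if __name__ == "__main__":
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}", "landing-page-headline")] += 1
    print(counts)  # roughly 5,000 in each group
```

Including the experiment name in the hash means the same user can land in different groups across different experiments. This keeps one test's split from lining up with another's.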

Analyzing the Results

After the test has run for a sufficient period, it’s time to analyze the results. This involves comparing the performance of each variant based on the metrics you are tracking, such as click-through rates, conversion rates, or engagement metrics. Statistical analysis techniques can be used to determine if the differences between the variants are statistically significant. This analysis will help you identify the winning variant and make data-driven decisions to optimize your marketing efforts.
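
One common way to check whether two conversion rates differ significantly is a two-proportion z-test. Here is a minimal Python sketch. It assumes the normal approximation is reasonable, meaning each group has a fair number of both conversions and non-conversions. The counts in the example are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test comparing the conversion rates of variants A and B.

    Under the null hypothesis both variants convert at the same rate, so the
    standard error uses the pooled conversion rate. Returns (z, p_value).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("need at least one conversion and one non-conversion overall")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: 120 of 4,000 visitors converted on A, 150 of 4,000 on B.
z, p = two_proportion_z_test(120, 4000, 150, 4000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these made-up numbers, B's observed conversion rate is 25% higher, yet the p-value comes out at about 0.06. At the conventional 0.05 threshold, the test would not declare a winner.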

By following these steps, you can effectively implement A/B testing and gain valuable insights into what resonates best with your audience. In the next section, we will explore some best practices to consider when conducting A/B tests to ensure accurate results and maximize the effectiveness of your testing efforts.

Best Practices in A/B Testing

To ensure accurate and reliable results from your A/B testing efforts, it’s essential to follow certain best practices. These practices will help you conduct effective tests, make data-driven decisions, and optimize your marketing strategies. Here are some best practices to consider when conducting A/B tests:

Test One Change at a Time

To accurately measure the impact of a specific element, it’s crucial to test only one change at a time. This allows you to isolate variables and attribute any variations in performance to the specific change being tested. If you make multiple changes simultaneously, it becomes difficult to determine which change influenced the results. By testing one change at a time, you can gather clear and actionable insights.

Ensure Your Sample Size is Large Enough

Sample size is the number of participants or visitors included in your A/B test. It needs to be large enough for the test to reliably detect a real difference between the variants if one exists. A larger sample reduces the chance that random variation skews the results and makes the findings more reliable. Before launching the test, calculate the minimum sample size per variant from your baseline conversion rate, the smallest effect you want to detect, your significance (confidence) level, and the statistical power you want.
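
Here is a minimal Python sketch of that calculation. It uses the standard normal-approximation formula for comparing two proportions and assumes a two-sided test with equally sized groups. The function and parameter names are hypothetical. Dedicated calculators may use slightly different formulas and give slightly different numbers.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH variant for a two-sided two-proportion test.

    baseline      -- current conversion rate of variant A (0.03 for 3%)
    relative_mde  -- smallest relative lift worth detecting (0.20 for +20%)
    alpha         -- significance level (1 - confidence level)
    power         -- chance of detecting the lift if it really exists
    """
    p1 = baseline
    p2 = baseline * (1 + relative_mde)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)            # about 0.84 for 80% power
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


# Hypothetical inputs: 3% baseline, aiming to detect a 20% relative lift.
print(sample_size_per_variant(0.03, 0.20))  # roughly 14,000 per variant
```

The required sample grows quickly as the effect you want to detect shrinks. Halving the detectable lift roughly quadruples the number of visitors you need.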

Allow Enough Time for the Test

A/B testing requires sufficient time to gather meaningful data and reach the planned sample size. Rushing the test or ending it prematurely can lead to inaccurate conclusions. Consider factors such as your website traffic volume and the expected impact of the change being tested to determine an appropriate test duration. Generally, it is recommended to run tests for at least one to two full weeks to account for day-of-week variations in user behavior.
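
Once you know the required sample size, a rough duration estimate is simple arithmetic. This minimal Python sketch assumes that every daily visitor enters the experiment and that traffic is split evenly across variants. It applies a minimum run length and rounds up to whole weeks. The function name and the one-week default are hypothetical choices.

```python
from math import ceil


def estimate_duration_days(n_per_variant: int, variants: int, daily_visitors: int,
                           min_days: int = 7) -> int:
    """Days needed to reach the required sample, rounded up to whole weeks."""
    days_for_sample = ceil(n_per_variant * variants / daily_visitors)
    days = max(days_for_sample, min_days)
    # Round up to full weeks so every day of the week is represented equally.
    return ceil(days / 7) * 7


# Hypothetical inputs: about 13,900 visitors per variant, 2 variants, 1,500 visitors a day.
print(estimate_duration_days(13_914, 2, 1_500))
```

Raising `min_days` to 14 matches the more cautious end of the one-to-two-week guideline.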

Use Statistical Analysis

Statistical analysis is critical in A/B testing to determine the significance of the results. Use statistical techniques such as hypothesis testing, confidence intervals, and p-values to evaluate the differences between the variants. This helps you determine if the observed variations in performance are statistically significant or simply due to chance. Statistical analysis provides a solid foundation for making informed decisions based on the test results.
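
A confidence interval shows the range of plausible values for the true difference, not just whether a difference exists. Here is a minimal Python sketch using the simple Wald (normal-approximation) interval for the difference between two conversion rates. This is one of several interval methods, chosen here for simplicity, and the counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def diff_confidence_interval(conv_a: int, n_a: int, conv_b: int, n_b: int,
                             confidence: float = 0.95) -> tuple[float, float]:
    """Wald (normal-approximation) interval for rate_B - rate_A."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    diff = p_b - p_a
    # Unpooled standard error: each variant keeps its own observed rate.
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    return diff - z * se, diff + z * se


# Hypothetical results: 120/4,000 conversions on A, 150/4,000 on B.
low, high = diff_confidence_interval(120, 4000, 150, 4000)
print(f"95% CI for B - A: {low:+.2%} to {high:+.2%}")
```

For these made-up counts, the interval runs from about -0.04 to +1.54 percentage points. Because it includes zero, the data are consistent with no real difference. With ten times as many visitors at the same rates, the interval would narrow and exclude zero.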

Document and Learn from Your Tests

Keep a record of your A/B tests, including the elements tested, the hypotheses, the variants, and the results. This documentation helps you track your testing history and learn from past experiments. Analyze the results of your tests, even if they do not show a statistically significant difference, as you can still gain insights and learn from the outcomes. This iterative process of testing, learning, and refining allows you to continually optimize your marketing strategies.

By following these best practices, you can conduct A/B tests effectively and obtain meaningful insights to enhance your marketing efforts. In the next section, we will discuss common mistakes to avoid when conducting A/B tests to ensure accurate results.

Common Mistakes in A/B Testing

While A/B testing can be a powerful tool for optimizing your marketing efforts, there are common mistakes that marketers should be aware of and avoid. These mistakes can lead to inaccurate results and hinder the effectiveness of your A/B tests. By understanding these common pitfalls, you can ensure that your A/B testing process is accurate and reliable. Here are some common mistakes to avoid:

Not Testing Simultaneously

One of the fundamental principles of A/B testing is to ensure that both variants are tested simultaneously. Testing variants at different times can introduce external factors that may influence the results. Factors such as changes in user behavior, market conditions, or seasonality can impact the performance of your variants. To obtain accurate results, it is crucial to test the variants concurrently.

Ignoring Small Changes

Small changes may seem insignificant, but they can have a noticeable impact on user behavior and conversion rates. Focusing only on major modifications can lead to missed optimization opportunities, because every element of your marketing strategy can potentially affect your results. It is therefore worth testing minor variations too, keeping in mind that detecting a small effect requires a larger sample size.

Stopping Tests Too Early

A/B tests require sufficient time to gather enough data. Ending tests prematurely can lead to inconclusive or inaccurate findings. Stopping the moment the results first look significant is especially risky, because checking repeatedly and stopping at the first significant result inflates the chance of a false positive. Allow your tests to run for an appropriate duration to capture enough data and account for any variations or trends. Define the sample size and test duration before you start. If you need the option to stop early, use a sequential testing method designed for repeated checks.
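
To see why stopping at the first significant result is risky, a small A/A simulation helps. In an A/A test, both variants have the same true conversion rate, so every "significant" result is a false positive. This minimal Python sketch compares checking once at the planned end with checking after every batch of visitors. The numbers of runs, looks, and visitors and the 5% conversion rate are arbitrary assumptions. You should typically see the peeking strategy produce false positives well above the 5% significance level.

```python
import random
from math import sqrt
from statistics import NormalDist


def p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no conversions (or only conversions) in both groups
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_peeking(runs: int = 300, looks: int = 10, visitors_per_look: int = 200,
                     rate: float = 0.05, alpha: float = 0.05,
                     seed: int = 7) -> tuple[float, float]:
    """Simulate A/A tests and return two false-positive rates:
    (checking once at the planned end, declaring a winner at any significant look).
    """
    rng = random.Random(seed)
    fixed_horizon_hits = 0
    peeking_hits = 0
    for _ in range(runs):
        conv_a = conv_b = n = 0
        declared_winner = False
        for _ in range(looks):
            # A new batch of visitors arrives in each group between looks.
            conv_a += sum(rng.random() < rate for _ in range(visitors_per_look))
            conv_b += sum(rng.random() < rate for _ in range(visitors_per_look))
            n += visitors_per_look
            if p_value(conv_a, n, conv_b, n) < alpha:
                declared_winner = True  # a "peeker" would stop the test here
        if declared_winner:
            peeking_hits += 1
        if p_value(conv_a, n, conv_b, n) < alpha:  # single check at the planned end
            fixed_horizon_hits += 1
    return fixed_horizon_hits / runs, peeking_hits / runs


if __name__ == "__main__":
    fixed, peeking = simulate_peeking()
    print(f"check once at the end:          {fixed:.1%} false positives")
    print(f"stop at first significant look: {peeking:.1%} false positives")
```

The final look is also one of the peeks, so the peeking strategy can never produce fewer false positives than the single planned check. Every extra look only adds chances to be fooled by noise.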

Not Accounting for External Factors

External factors, such as holidays, events, or changes in market trends, can influence user behavior and impact the performance of your variants. Failing to account for these external factors can lead to misleading conclusions. It’s important to consider the context in which your tests are conducted and analyze the results with an understanding of any potential external influences.

By avoiding these common mistakes, you can ensure that your A/B testing process is accurate, reliable, and provides meaningful insights. Remember to test simultaneously, consider even small changes, allow sufficient test duration, and account for external factors. A well-executed A/B testing strategy can help you optimize your marketing efforts and drive better results.

In conclusion, A/B testing is a valuable technique in digital marketing, allowing you to make data-driven decisions and optimize your campaigns. By understanding the fundamentals, implementing the tests effectively, following best practices, and avoiding common mistakes, you can harness the power of A/B testing to gain valuable insights and improve your marketing performance.

Ready to blow up your business? Contact Soulweb today for help with all of your marketing needs.